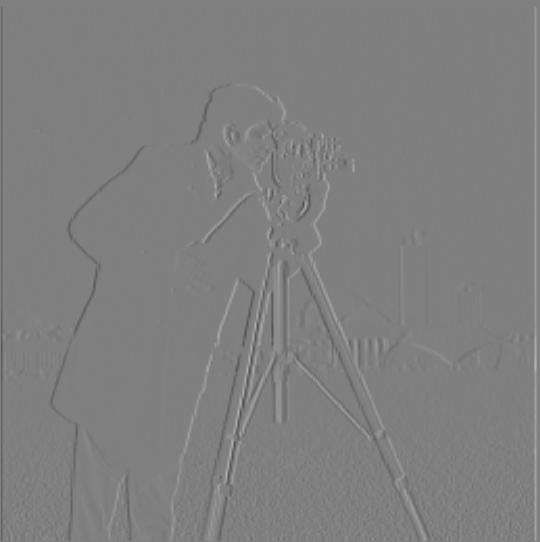

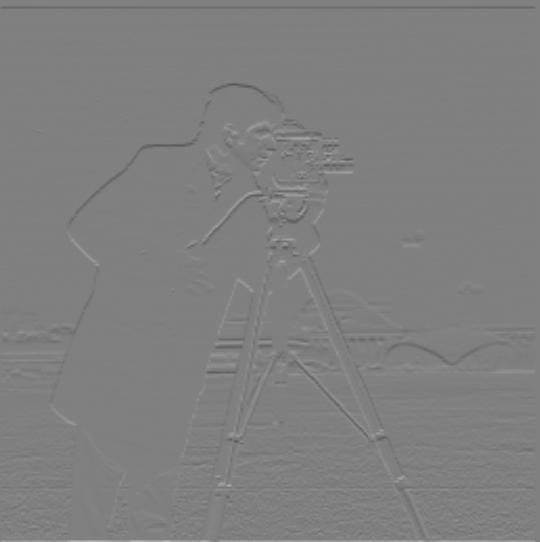

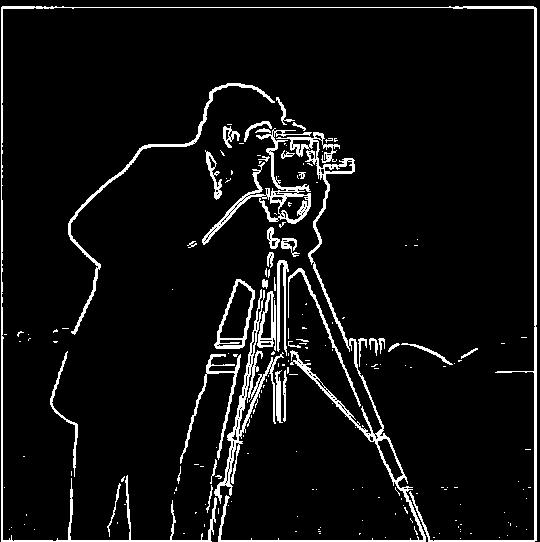

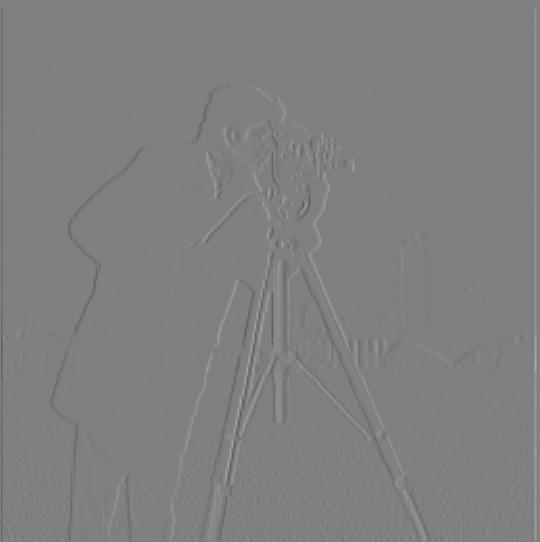

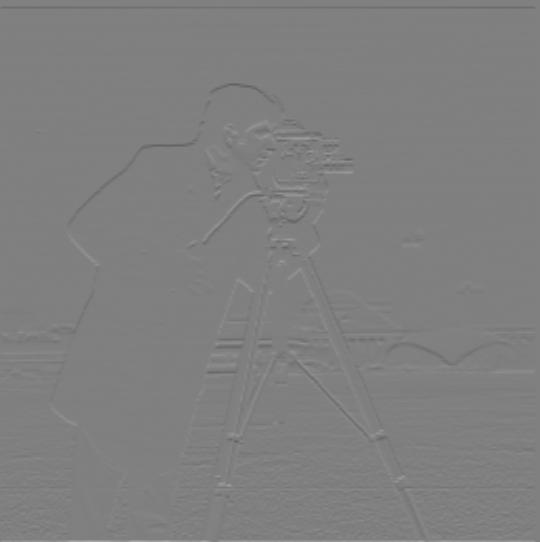

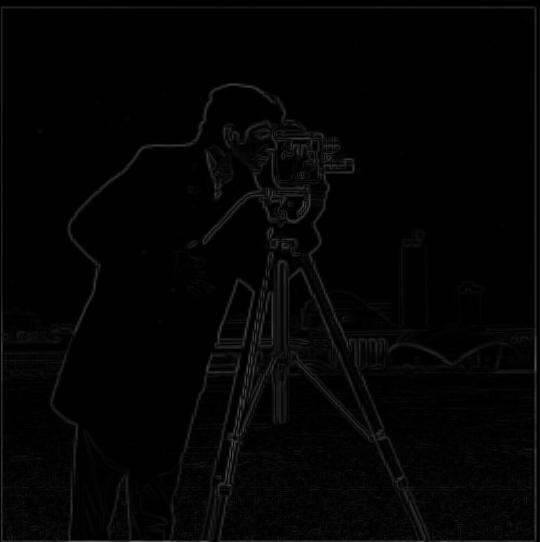

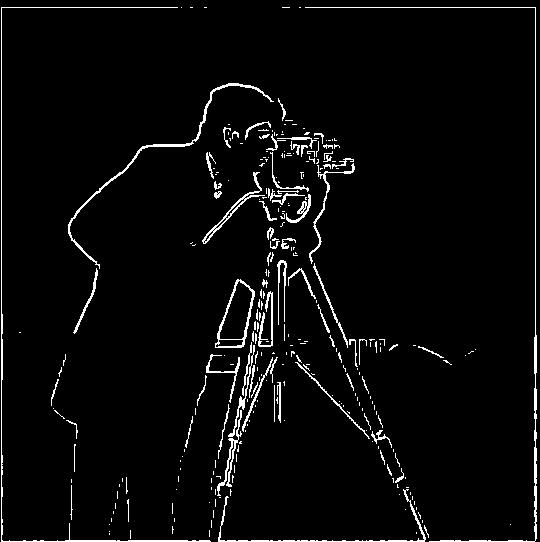

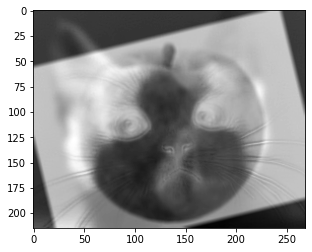

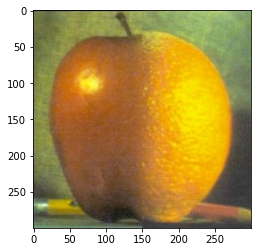

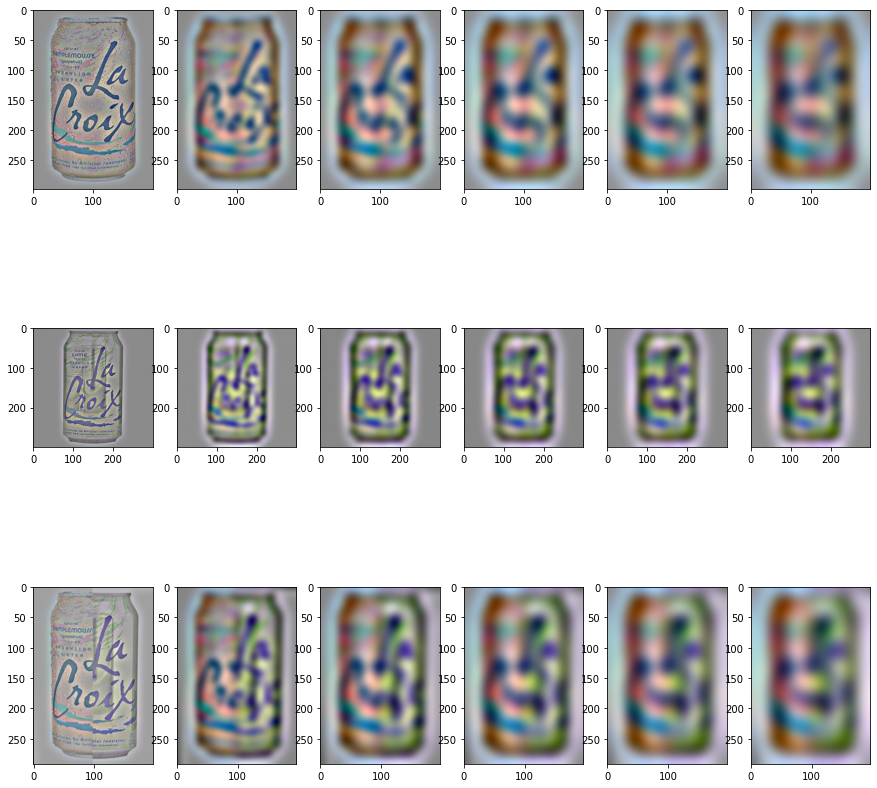

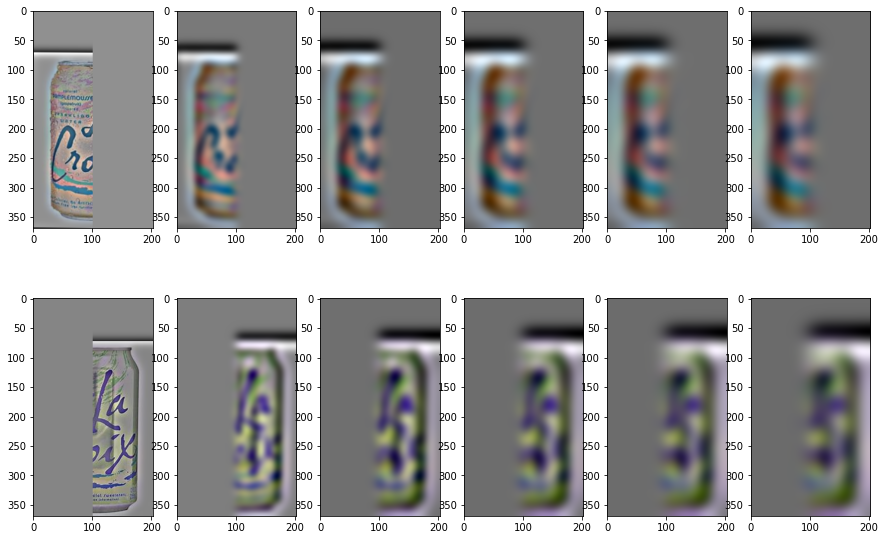

Part 1.1: Finite Difference Operator

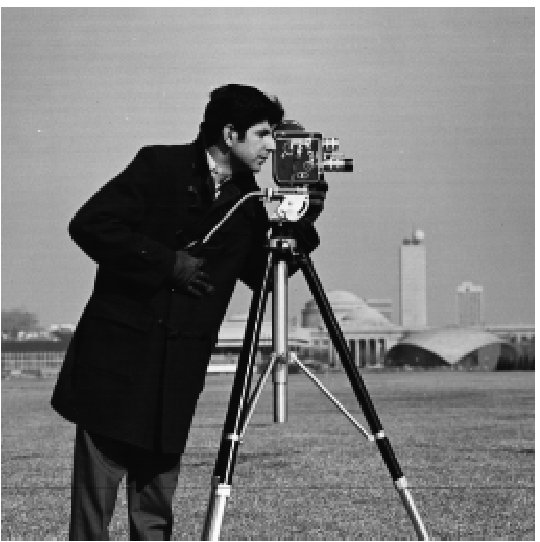

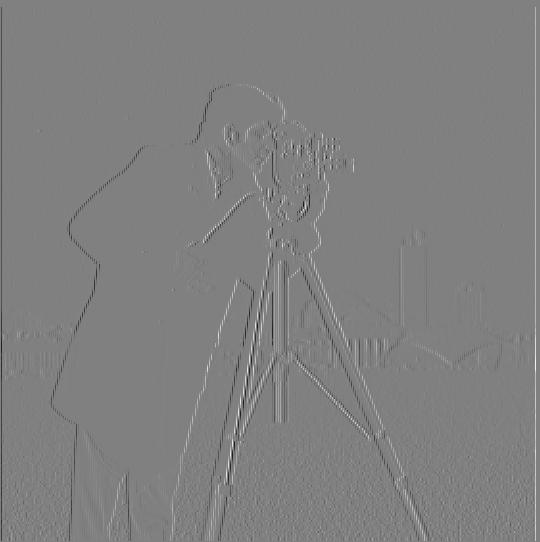

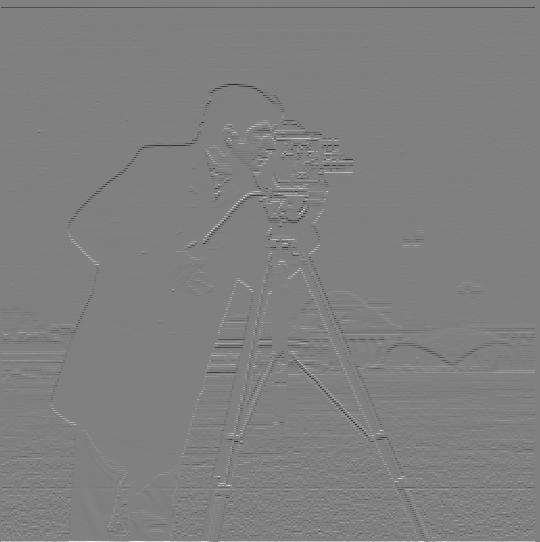

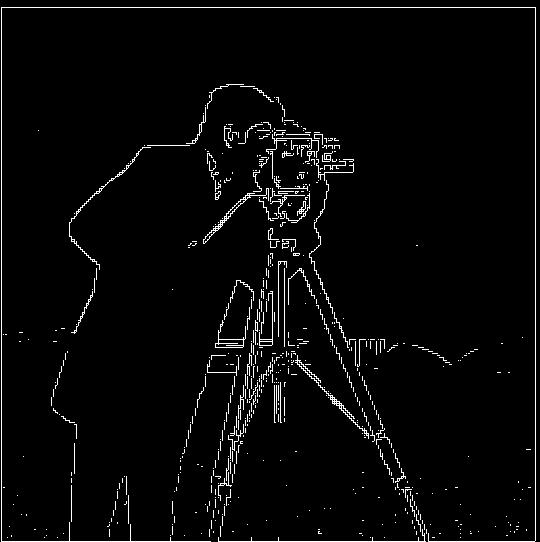

I convolve the cameraman image with D_x = [1, -1] and D_y = [1, -1].T using scipy.signal.convolve2d with mode='same', boundary='symm' to get the partial derivatives in x and y. From these, I compute the gradient magnitude as np.sqrt(partial_x**2 + partial_y**2). Finally, I binarize the gradient magnitude image with a threshold of 0.27.
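
The steps above can be sketched roughly as follows. This is a minimal illustration and not the exact project code: the function name `gradient_edges` is hypothetical, the input is assumed to be a grayscale float image scaled to [0, 1] (so that a threshold of 0.27 is meaningful), and a small synthetic step image stands in for the cameraman image.

```python
import numpy as np
from scipy.signal import convolve2d

# Finite difference operators, written as 2D kernels for convolve2d.
D_x = np.array([[1, -1]])    # horizontal difference (1 x 2)
D_y = np.array([[1], [-1]])  # vertical difference (2 x 1)


def gradient_edges(image, threshold=0.27):
    """Hypothetical helper: partial derivatives, gradient magnitude, edge map.

    Assumes `image` is a 2D float array with values in [0, 1].
    """
    partial_x = convolve2d(image, D_x, mode="same", boundary="symm")
    partial_y = convolve2d(image, D_y, mode="same", boundary="symm")
    magnitude = np.sqrt(partial_x**2 + partial_y**2)
    edges = magnitude > threshold  # boolean edge image
    return partial_x, partial_y, magnitude, edges


# Synthetic stand-in for the cameraman image: a vertical step edge.
image = np.zeros((8, 8), dtype=float)
image[:, 4:] = 1.0

partial_x, partial_y, magnitude, edges = gradient_edges(image)
```

On this synthetic image only the x derivative responds, since the intensity changes only across columns. For a real photograph the threshold generally has to be tuned by eye to balance suppressed noise against lost edges.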